6 AI Trends That Will Impact the IoT Industry in 2024 | SPONSORED


While most of the attention around AI in recent years has gone to consumer-focused applications like text generation and image creation tools, businesses are also adopting AI for more practical uses in the physical world. In this article, you will learn about some exciting AI trends to watch in 2024 that are relevant to Industrial IoT workloads.

Data is the engine of AI for IoT

Even if you don’t have immediate plans to adopt some of these technologies, you should start collecting data from your operations in preparation. Historical data for your business forms a foundation for success because you can fine-tune AI models for accuracy in your specific use case.

“Data is the new oil” is a cliche at this point, but having proprietary data on hand will give you much better results compared to starting from scratch with AI tools off the shelf. The bottom line: if you aren’t collecting as much of your business’s data as possible, you’re throwing money away.

For IoT specifically, time series data is usually the most common type of data. For both historical analysis and real-time monitoring, you’ll want to access that data quickly while keeping storage affordable. General-purpose data stores aren’t designed for the unique requirements of time series data, so many companies in the IoT sector are turning to dedicated solutions. A purpose-built time series database, like InfluxDB, gives you the flexibility to meet both goals.

Once you have a reliable data collection and storage solution, you can rest easy knowing that you can adopt and integrate new AI tools into your organization and hit the ground running. Now, let’s take a look at some specific AI trends you should watch in the year ahead.

Audio models

Businesses and government organizations already utilize AI models trained to identify audio. SAP created tools that use microphones in factories to predict when machines need routine maintenance or are about to break down. The AI model can look at data points like volume and frequency to determine if something is wrong and suggest preventive action.
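To make the idea of checking volume and frequency concrete, here is a minimal sketch in plain Python. It is not SAP’s method: the function names, thresholds, and synthetic signals are all assumptions for illustration, and a production system would use a trained model and an optimized FFT library rather than this naive transform.

```python
import math

def rms_volume(samples):
    """Root-mean-square amplitude, a simple proxy for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def dominant_frequency(samples, sample_rate):
    """Frequency (Hz) of the strongest component, found with a naive DFT."""
    n = len(samples)
    best_bin, best_mag = 0, 0.0
    for k in range(1, n // 2):  # skip bin 0 (the constant offset)
        re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        im = sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        mag = math.hypot(re, im)
        if mag > best_mag:
            best_bin, best_mag = k, mag
    return best_bin * sample_rate / n

def looks_abnormal(samples, sample_rate, baseline_hz, baseline_rms,
                   hz_tolerance=20.0, rms_ratio=1.5):
    """Flag a clip whose pitch or loudness drifts from a known-good baseline.

    The tolerance values are hypothetical and would need tuning per machine.
    """
    freq = dominant_frequency(samples, sample_rate)
    volume = rms_volume(samples)
    return abs(freq - baseline_hz) > hz_tolerance or volume > baseline_rms * rms_ratio

def sine(freq_hz, amplitude, sample_rate=1000, n=200):
    """Synthetic test tone standing in for a microphone recording."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

healthy = sine(50, 1.0)   # assumed normal hum of the machine
faulty = sine(120, 1.0)   # assumed higher-pitched whine
print(looks_abnormal(healthy, 1000, baseline_hz=50, baseline_rms=0.707))
print(looks_abnormal(faulty, 1000, baseline_hz=50, baseline_rms=0.707))
```

A hand-written rule like this only catches faults you already know how to describe. The appeal of a trained audio model is that it can learn patterns that are hard to express as fixed thresholds.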

In Africa, the World Wildlife Fund (WWF) uses audio models to prevent illegal deforestation. During six months in 2023, the WWF prevented 34 illegal logging attempts using AI models trained to identify sounds like chainsaws or heavy vehicles. When the models detect these sounds, they send location-specific alerts to the authorities.

Vision models

AI vision models for image recognition and classification continue to improve. Many powerful open source models are available and can be fine-tuned with your custom dataset to improve accuracy.

The state of California is using AI to help with the early detection of forest fires by feeding video from over 1,000 cameras across the state to an AI model trained on a custom dataset to identify smoke. This model can alert human workers, prompting them to confirm if a fire has started.

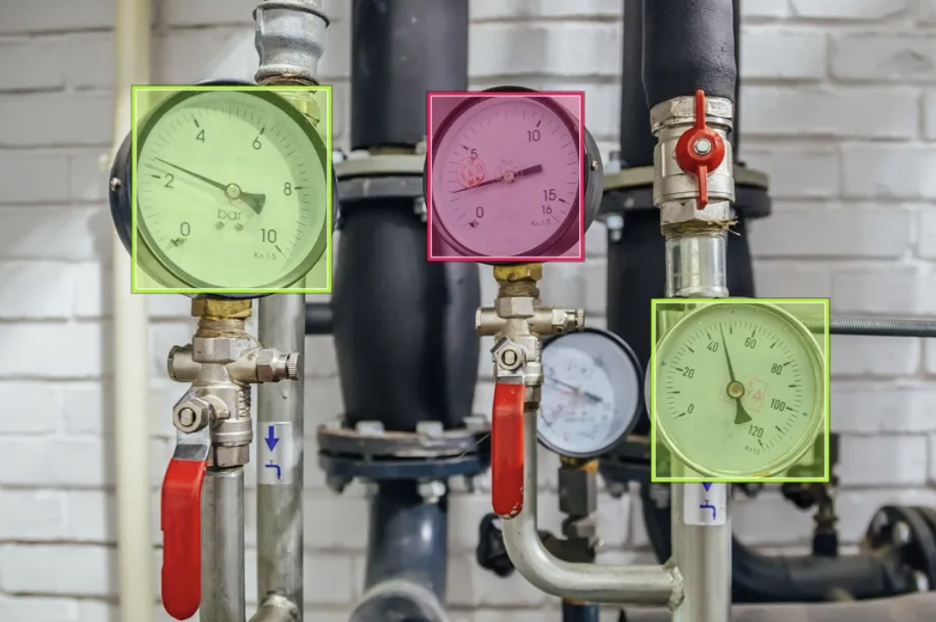

Manufacturers are using AI vision models for detecting product defects on assembly lines, improving worker safety, and monitoring gauges.

AI vision model identifying gauges and extracting the value for monitoring

Source: https://roboflow.com/industries/manufacturing

Time series forecasting models

Time series forecasting is one area where traditional statistical methods continue to be more accurate and far more efficient than newer machine learning techniques. But that trend may finally change in the years ahead.

Researchers, and even businesses like Amazon that run forecasting models in production, recognize the benefits of modern deep learning models, especially for data with high dimensionality. This kind of data is challenging to model with traditional statistical methods like ARIMA, which require human experts to tune the models. On the cutting edge, teams are starting to build time series forecasting models using the same transformer architecture made famous by tools like ChatGPT.
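For readers who have not worked with the statistical side, the sketch below fits a first-order autoregressive model, AR(1), by least squares and rolls it forward. This is only the simplest autoregressive building block, not a full ARIMA model, and the sensor readings are made-up values for illustration.

```python
def fit_ar1(series):
    """Fit x[t] = c + phi * x[t-1] by ordinary least squares."""
    x_prev = series[:-1]
    x_next = series[1:]
    n = len(x_prev)
    mean_prev = sum(x_prev) / n
    mean_next = sum(x_next) / n
    cov = sum((a - mean_prev) * (b - mean_next) for a, b in zip(x_prev, x_next))
    var = sum((a - mean_prev) ** 2 for a in x_prev)
    phi = cov / var
    c = mean_next - phi * mean_prev
    return c, phi

def forecast(series, steps):
    """Predict the next `steps` values by applying the fitted model repeatedly."""
    c, phi = fit_ar1(series)
    predictions, last = [], series[-1]
    for _ in range(steps):
        last = c + phi * last
        predictions.append(last)
    return predictions

# Hypothetical sensor readings that settle toward a steady value.
readings = [0.0, 2.0, 3.0, 3.5, 3.75, 3.875]
print(forecast(readings, steps=3))
```

A model this small has two parameters and fits in microseconds, which is part of why statistical methods remain efficient. The trade-off is that choosing the model order, handling seasonality, and dealing with many related series is where the expert tuning mentioned above comes in.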

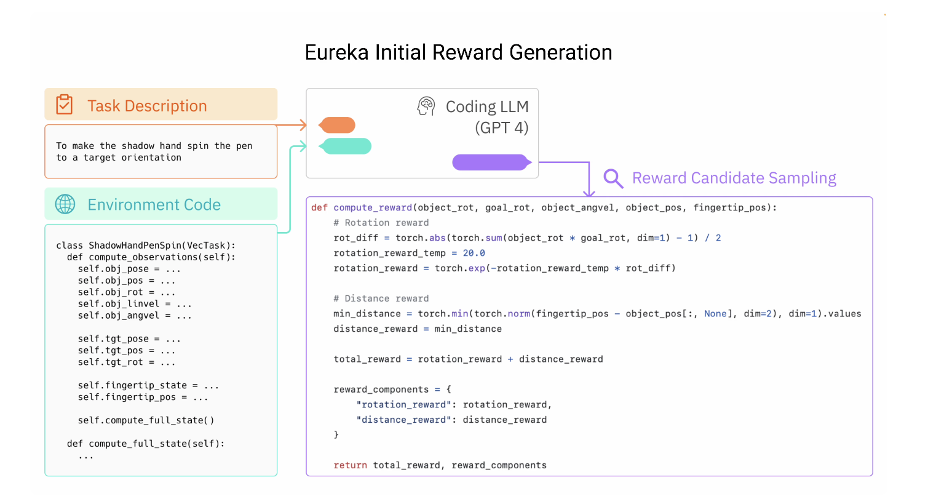

LLM enhanced reinforcement learning for robotics

There have been several breakthroughs in robotics this year thanks to new AI model architectures. Eureka, created by Nvidia and other research teams, uses GPT-4 to write and continually improve the reward function code used for reinforcement learning in robotics.

Eureka allows robots to learn new, complex skills without requiring humans to program them. Without that manual programming effort, robots can be deployed in environments where they would previously have been unaffordable. It also makes robots more adaptable to environmental changes that would otherwise cause them to fail at their task.

Structured data output

One historical challenge of working with LLMs has been getting them to reliably return output in a structured format like JSON, which is what makes it possible to use responses as inputs for other programs. Reliable structured output allows AI agents to communicate with different tools and perform complex tasks without requiring human intervention.

While there is still no perfect solution to guarantee output structure, model providers and third-party developers have built tools that make structured output more reliable. Some examples:

• OpenAI function calling – OpenAI fine-tuned their models for this specific use case, allowing developers to supply a JSON schema that the model’s responses should match.

• Instructor – Instructor is a Python library that uses Pydantic to make it easier to handle LLM responses with tools like error handling and type hinting to improve developer productivity.
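The general pattern behind tools like these is to validate the model’s output and ask again when it does not match. The sketch below shows that pattern with only the standard library. It does not use the OpenAI or Instructor APIs: `call_model` is a hypothetical stand-in for whatever function sends a prompt to your model, and the field names are invented for this example.

```python
import json

# Hypothetical schema for a machine status report: field name -> accepted type(s).
REQUIRED_FIELDS = {
    "machine_id": str,
    "status": str,
    "temperature_c": (int, float),
}

def parse_reading(raw_text):
    """Parse model output and check it against REQUIRED_FIELDS.

    Raises ValueError if the text is not JSON or a field is missing or mistyped.
    """
    try:
        data = json.loads(raw_text)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in data:
            raise ValueError(f"missing field: {field}")
        if not isinstance(data[field], expected_type):
            raise ValueError(f"wrong type for field: {field}")
    return data

def ask_with_retries(call_model, prompt, max_attempts=3):
    """Call the model until its output validates, feeding back the last error."""
    last_error = None
    for _ in range(max_attempts):
        suffix = f"\nYour previous answer was rejected: {last_error}" if last_error else ""
        raw = call_model(prompt + suffix)
        try:
            return parse_reading(raw)
        except ValueError as exc:
            last_error = exc
    raise ValueError(f"no valid response after {max_attempts} attempts: {last_error}")

# Fake model for demonstration: first reply is chatty text, second is valid JSON.
replies = iter([
    "Sure! Here is the status you asked for.",
    '{"machine_id": "pump-7", "status": "ok", "temperature_c": 71.5}',
])
print(ask_with_retries(lambda prompt: next(replies), "Report the status of pump-7 as JSON."))
```

Libraries built on Pydantic replace the hand-written field checks with declared models, which scales better once schemas become nested or numerous.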

Fine-tuning and Retrieval Augmented Generation

In most business environments, you will have private data that AI models like GPT-4 weren’t trained on, making them less accurate for your specific use case. Two solutions to this problem are fine-tuning and Retrieval Augmented Generation (RAG).

Fine-tuning involves modifying a more general pre-trained AI model by giving specific training examples to fit a use case. This customization is crucial in industrial settings, where equipment and processes vary widely between facilities. For example, a fine-tuned model could more accurately predict maintenance needs for a specific type of turbine used in power generation than a generic model that doesn’t have images of that turbine in its training data.

RAG is another strategy for giving relevant information to an AI model. RAG is comparatively easy to implement because you only provide information relevant to the task at the time you run the model rather than doing additional training. In an IoT environment, you could provide information like historical sensor data, past maintenance records, or manufacturer guidelines to inform the model’s decisions. For example, when diagnosing an equipment problem, a RAG-based system could pull up similar past incidents and their resolutions to suggest the most effective repair strategy. This speeds up problem-solving and helps capture and utilize institutional knowledge, which is often a challenge in large-scale industrial operations.
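The retrieval step can be sketched in a few lines. The example below ranks past maintenance records by word overlap with the current problem and places the best matches into a prompt. Everything here is an assumption for illustration: the records are invented, and real RAG systems typically use embedding models and a vector search index instead of simple word counts.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine_similarity(tokens_a, tokens_b):
    """Cosine similarity between two bags of words."""
    counts_a, counts_b = Counter(tokens_a), Counter(tokens_b)
    dot = sum(counts_a[t] * counts_b[t] for t in counts_a)
    norm = (math.sqrt(sum(v * v for v in counts_a.values()))
            * math.sqrt(sum(v * v for v in counts_b.values())))
    return dot / norm if norm else 0.0

def retrieve(query, records, top_k=2):
    """Return the top_k records most similar to the query."""
    query_tokens = tokenize(query)
    ranked = sorted(records,
                    key=lambda r: cosine_similarity(query_tokens, tokenize(r)),
                    reverse=True)
    return ranked[:top_k]

def build_prompt(query, records, top_k=2):
    """Combine retrieved records and the question into one prompt for the model."""
    context = "\n".join(f"- {r}" for r in retrieve(query, records, top_k))
    return (f"Past incidents:\n{context}\n\n"
            f"Current problem: {query}\nSuggest a repair strategy.")

# Hypothetical maintenance log entries.
MAINTENANCE_LOG = [
    "Pump 3 bearing overheated; replaced bearing and relubricated.",
    "Conveyor belt slipped; tightened tensioner.",
    "Pump 7 vibration high; realigned motor shaft.",
]

print(build_prompt("Pump 7 vibration is high", MAINTENANCE_LOG))
```

The resulting prompt is what gets sent to the model, so the model itself never needs retraining when new maintenance records are added to the log.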

Wrapping up

AI is a rapidly changing field, and outside of consumer tools it can still seem abstract, but as the examples above show, it is already solving real-world problems and will impact businesses significantly in the near future. Companies that adopt these new technologies will have a huge advantage over slower-moving competitors.

About the author

This article was written by Charles Mahler, a Technical Writer at InfluxData, where he creates content to help educate users on the InfluxData and time series data ecosystem. Charles’ background includes working in digital marketing and full-stack software development.